It’s nearly the end of September and from Monday next week all students will be back, including a new class of 2014 Computer Science students. As I have written before, I remember my first day as a university student very well. Everything was new to me; I had no idea what the various courses would cover, what the world would look like at the end of my studies, or what kind of job I would do.

If you are a student starting on Monday, I can tell you the exact content of your courses and, given that everything is now on-line, you can also explore all the material for the year from day 1 (don’t miss your induction week starting on Monday at 10AM in C114!). However, I’m still not able to tell you what the world will look like when you finish your degree here at Middlesex. But one thing I do know: this is probably one of the most exciting times to study Computer Science. We now have self-driving cars, like the one in the picture.

Source: Wikipedia

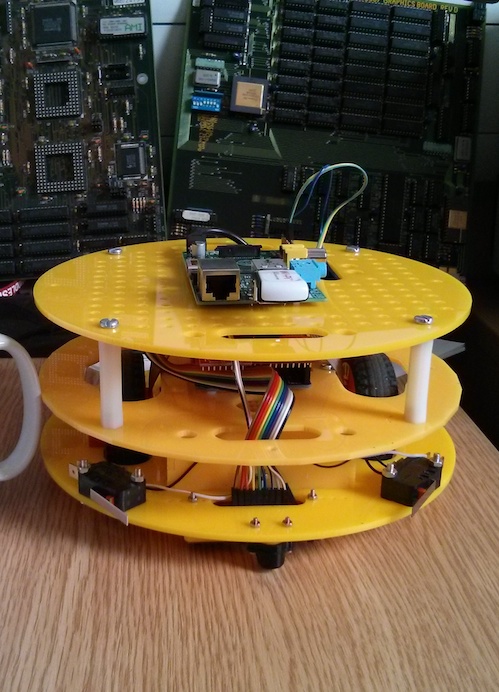

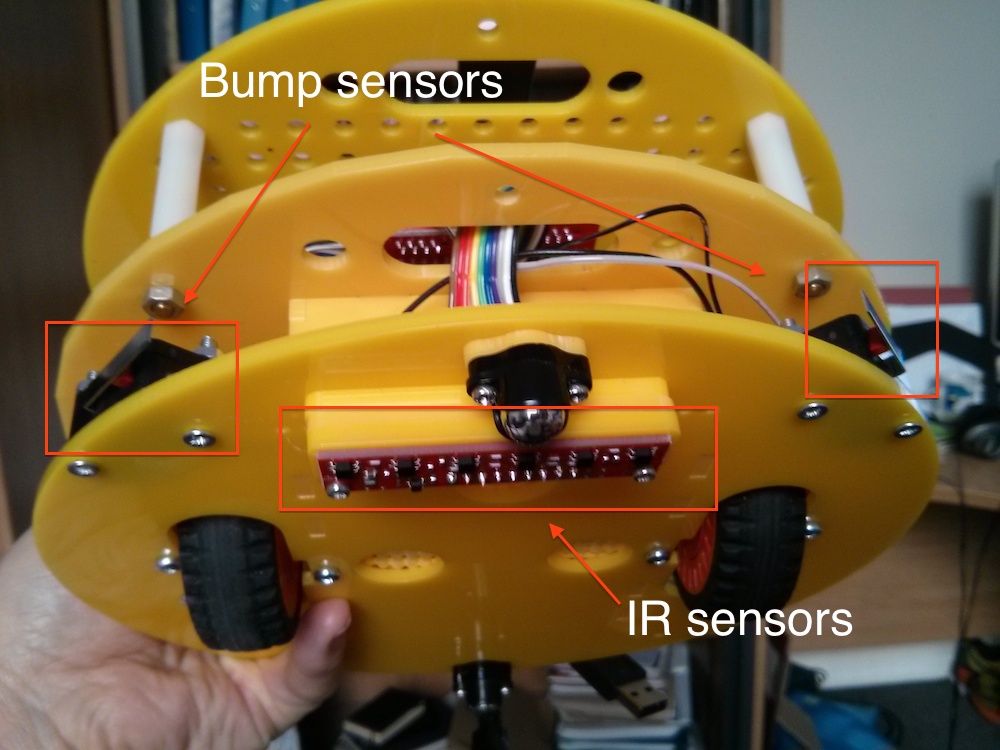

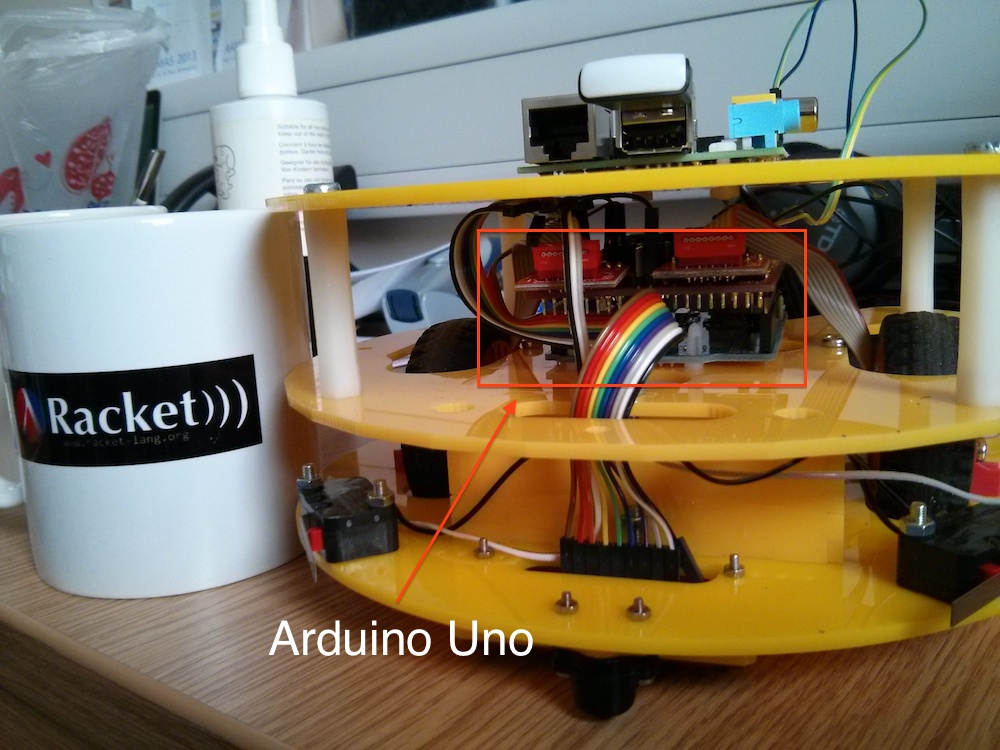

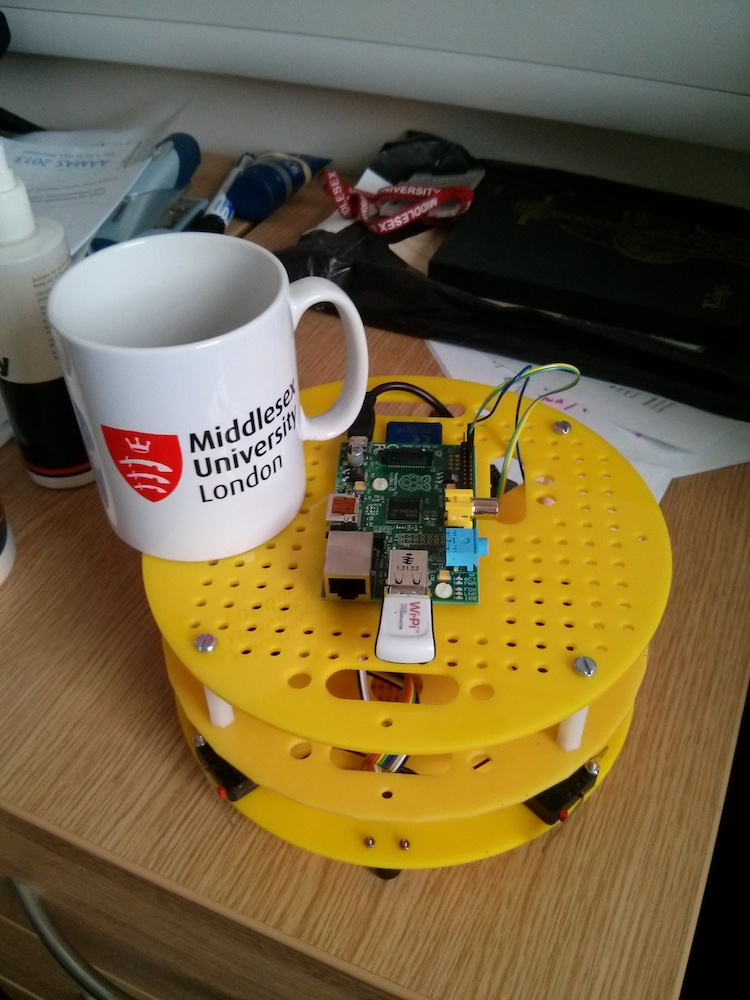

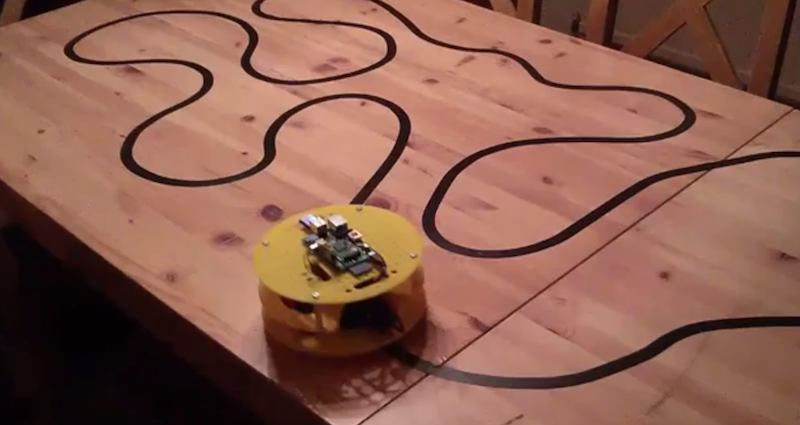

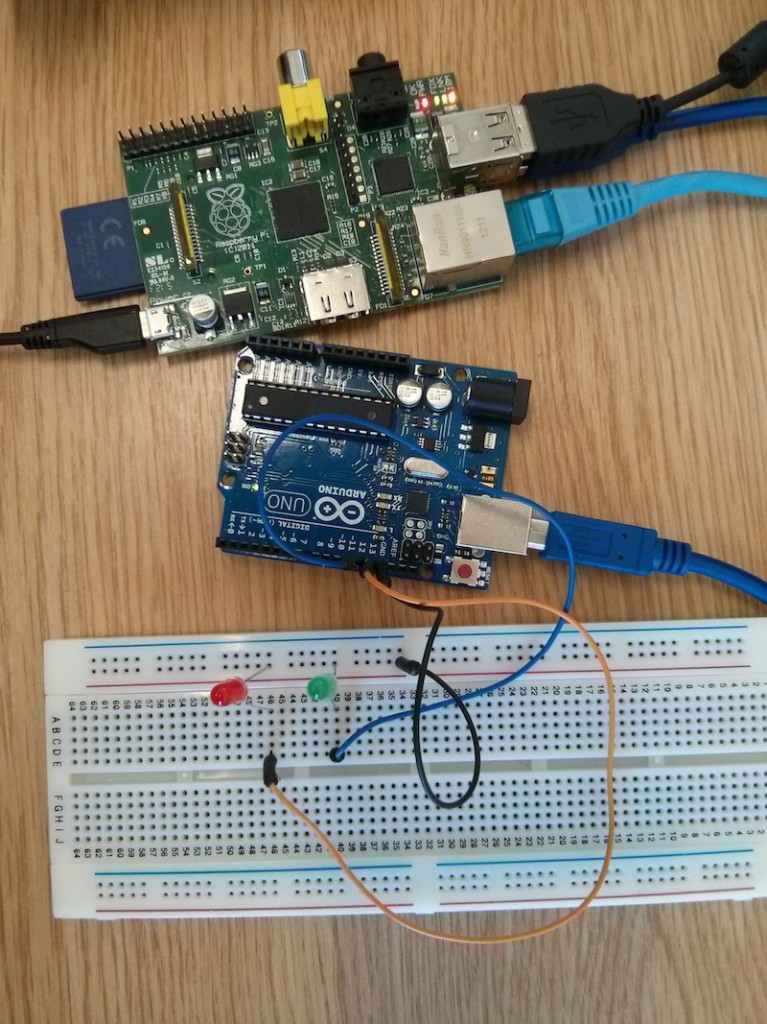

These are not prototypes that maybe we will see on our roads in ten years’ time: these cars are real. I spent a few weeks in California last month and I counted 18 self-driving cars in a single day in Mountain View. One of them gave me priority at a pedestrian crossing, autonomously. These cars are equipped with a large number of sensors, very similar to the ones that you are going to see here in your first year.

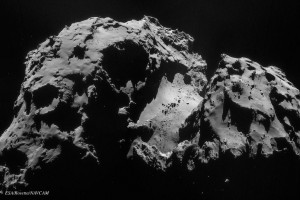

There are a number of other things that I would have considered science fiction when I started my studies: the European Space Agency is gracefully landing scientific instruments on a comet. This is a picture taken from Rosetta (see http://www.esa.int/Our_Activities/Space_Science/Rosetta):

So this is what a comet looks like: very different from what I used to draw as a kid! The comet is approximately 4 km long and you can clearly see small hills and flats on it. Sometimes gas and dust are emitted (due to solar wind), and these form the visible tail.

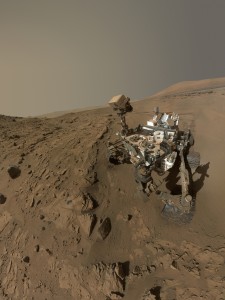

And what about Curiosity, the NASA Mars rover? You have probably heard of it; this is what the rover looks like:

This is a “selfie”. It is real: it is not an artist’s impression, it is the actual rover on Mars, and the tracks that you see are real tracks in Martian soil (the image was built by collating various pictures taken by different on-board cameras). The rover weighs 900 kg: this is the weight of a (modern) Fiat 500. Think about it: humans have sent an autonomous, selfie-taking Fiat 500 to Mars, with a lot of scientific instruments on board.

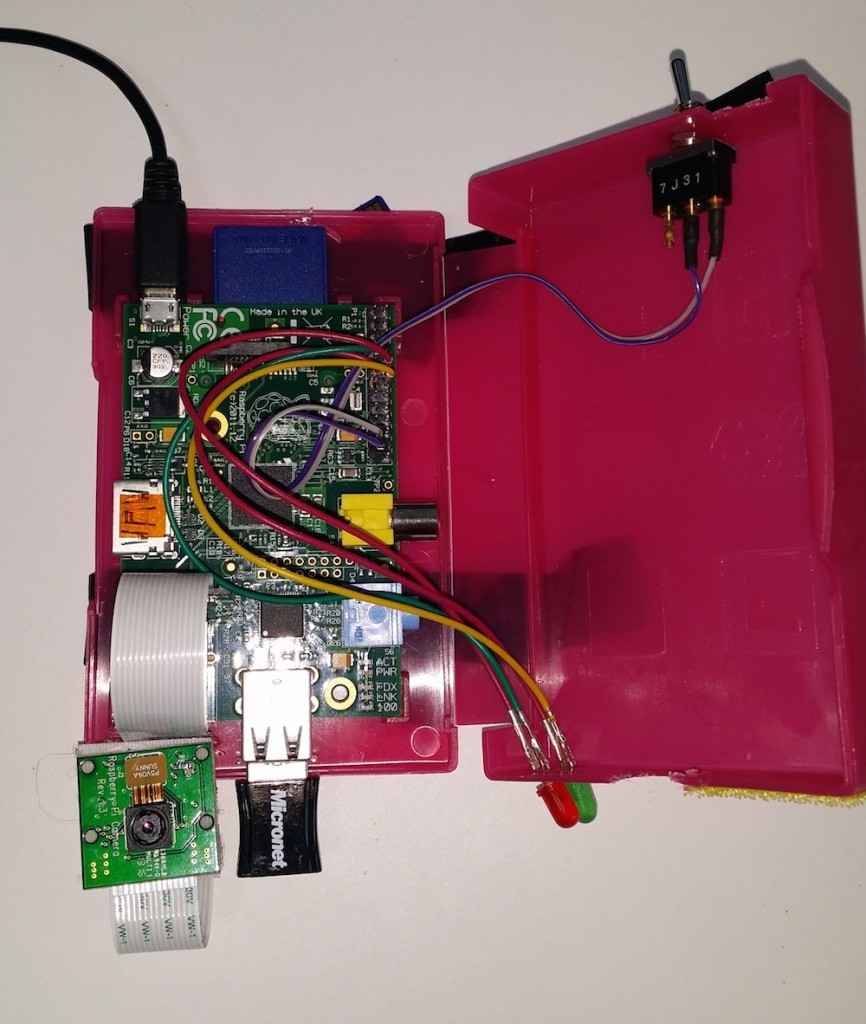

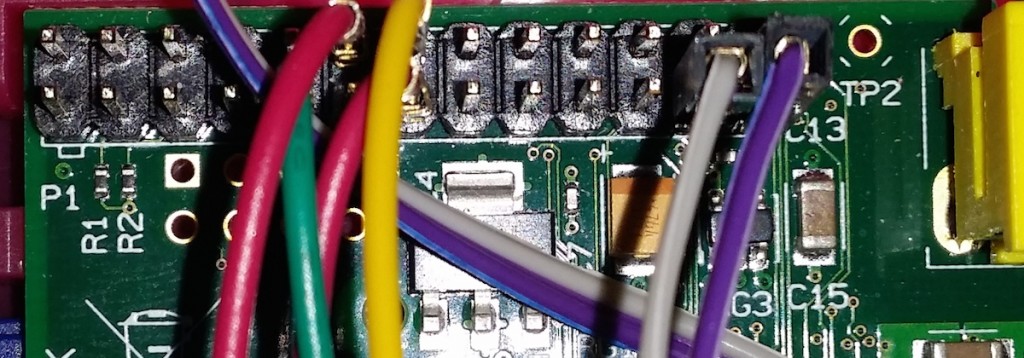

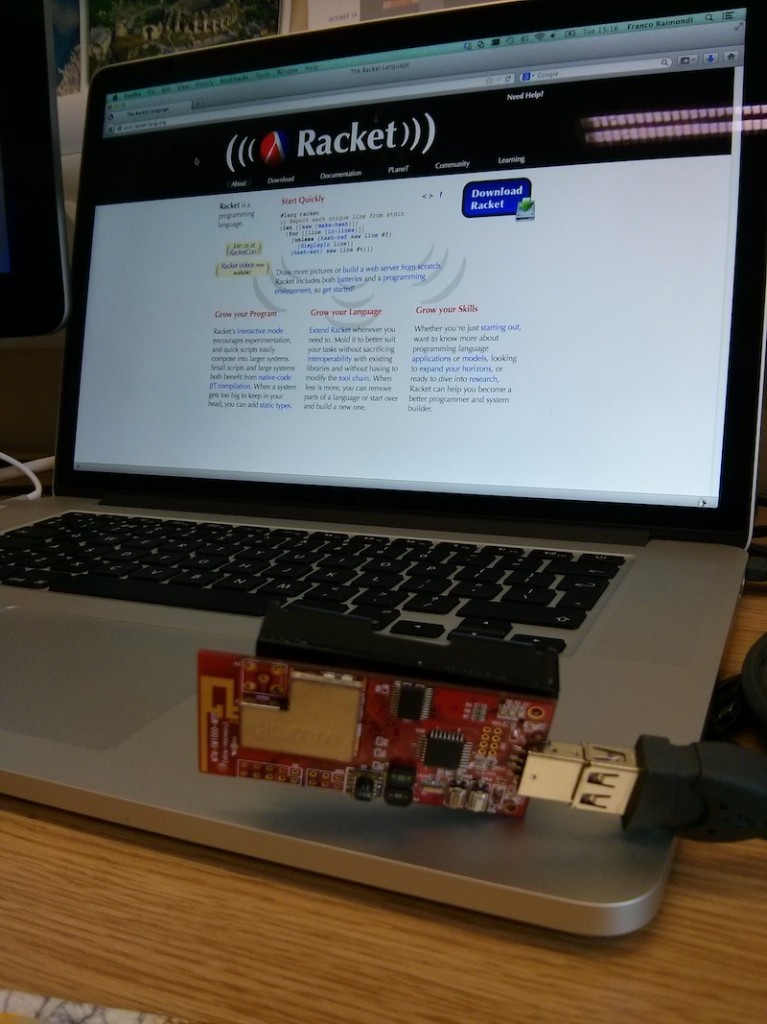

As I said above, I don’t know what the world will look like in 3 or 4 or 10 years’ time and I don’t know what the job market will be like. But from Monday you have a unique opportunity: you can spend the next 3 years learning exciting new things, and all the lecturers here are probably more excited about them than you are. We will teach you some theory, programming languages, and even a bit of electronics, but you must be curious, work independently and learn new things because you enjoy them. You will have lectures and labs, but we assume that you will spend a lot of time at home reading, trying to solve exercises and writing code. If you can do this, then you will be ready for whatever is waiting for us in the future, and you will have a lot of fun along the way.

If you are a new Computer Science student, remember that you have a lot of ways to get involved and to contact us:

- Forums in Moodle (log in using Unihub): this should be your main resource, so use it every day!

- Computer Science Class of 2014 Facebook page

- Women in Computing – Middlesex University

- School of Science and Technology Facebook page

- School of Science and Technology Twitter stream