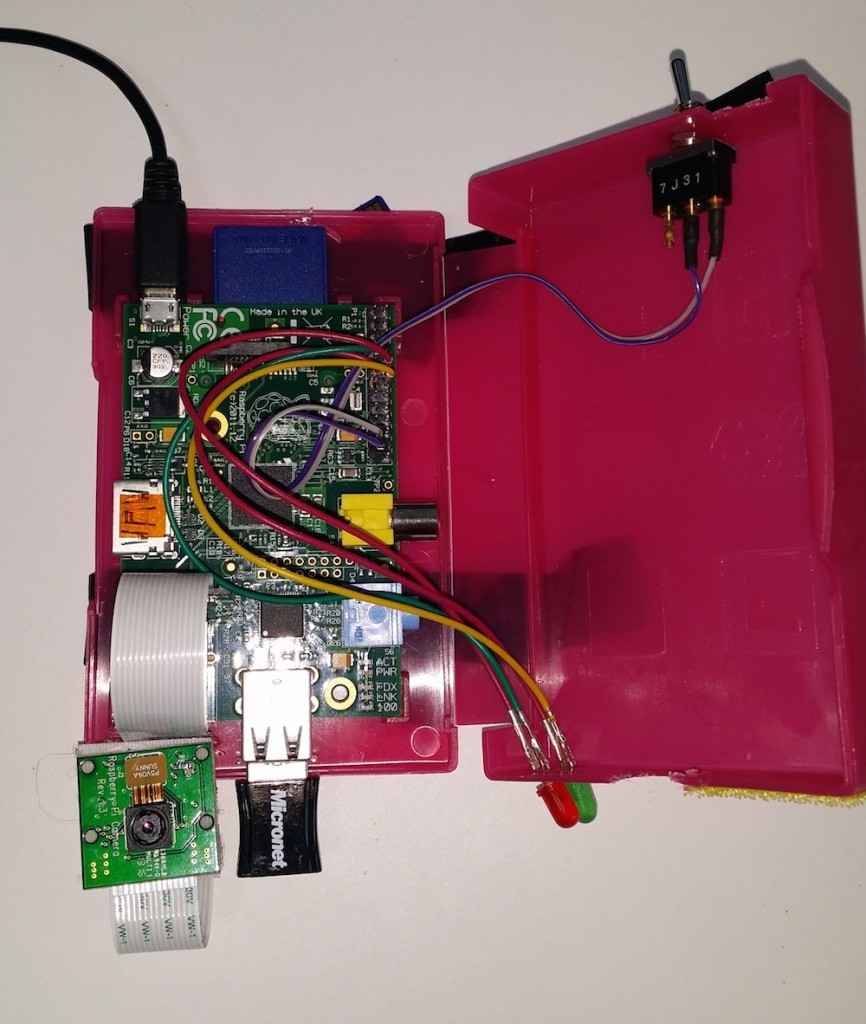

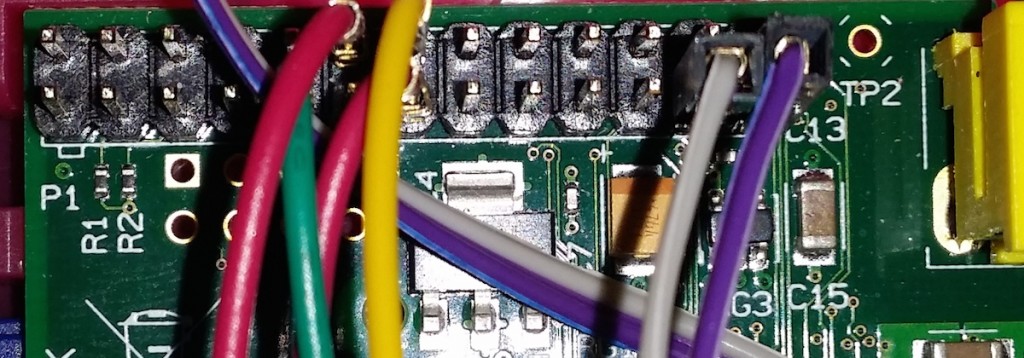

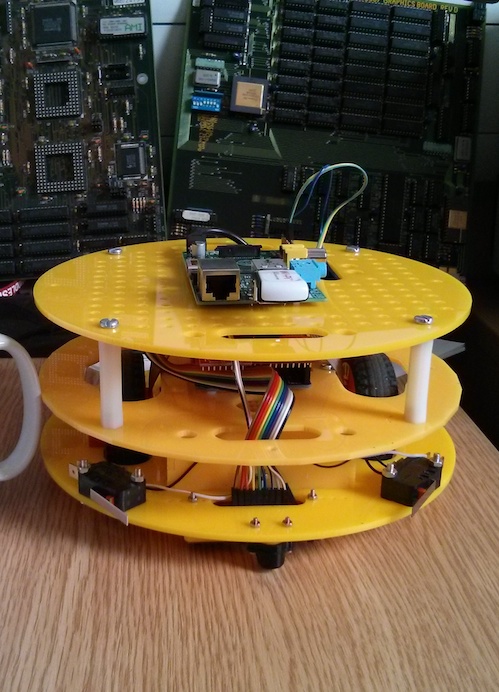

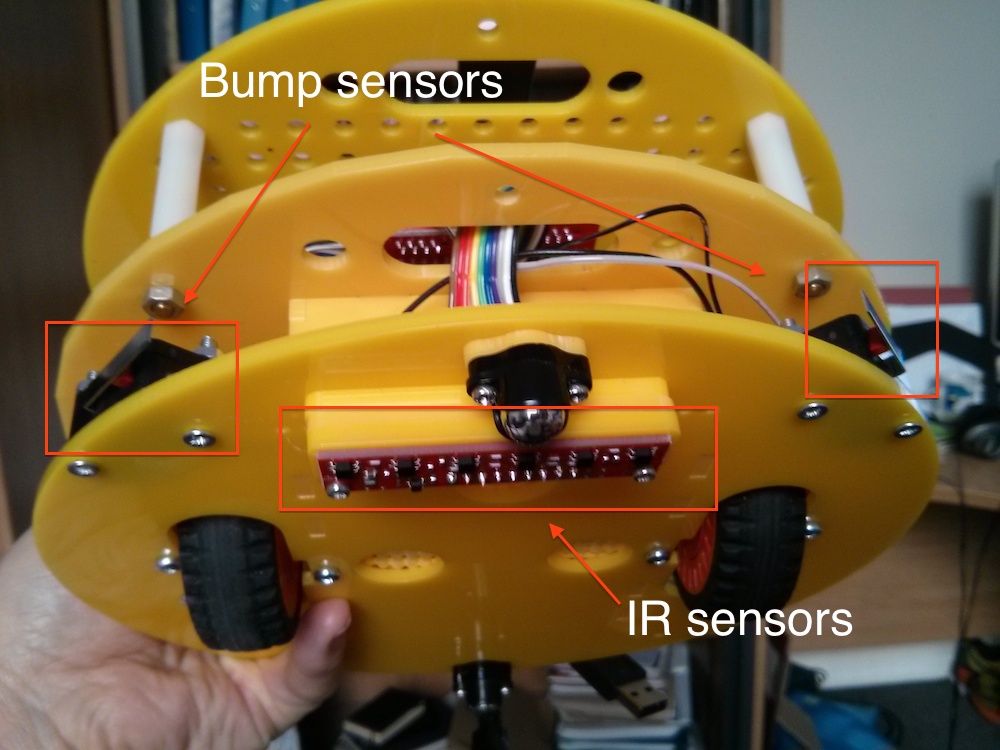

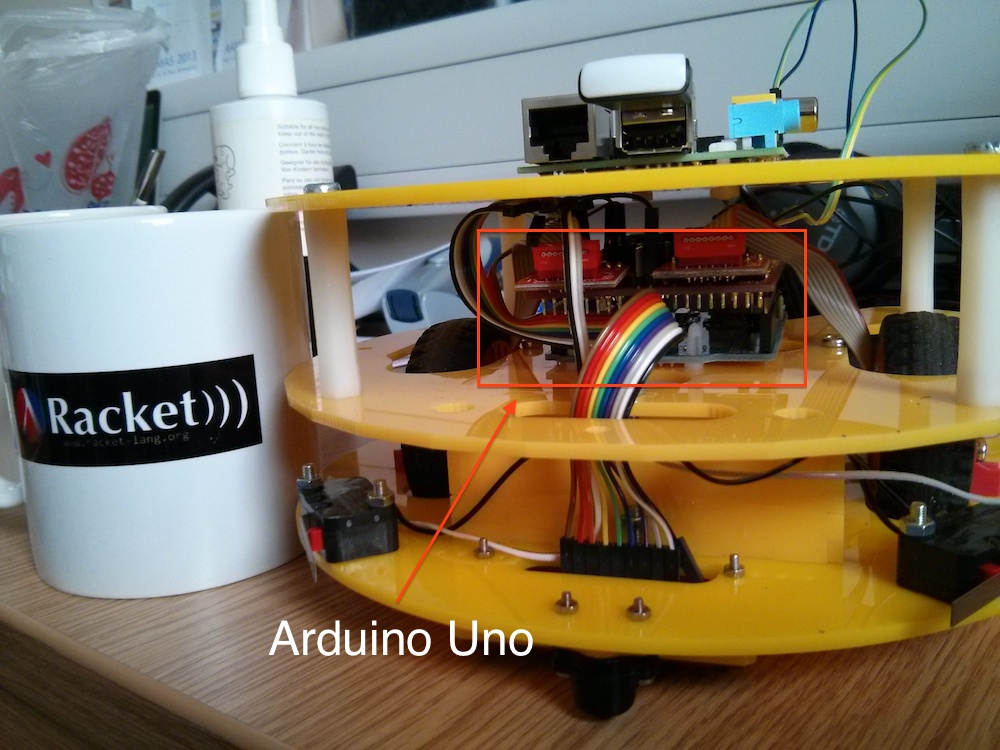

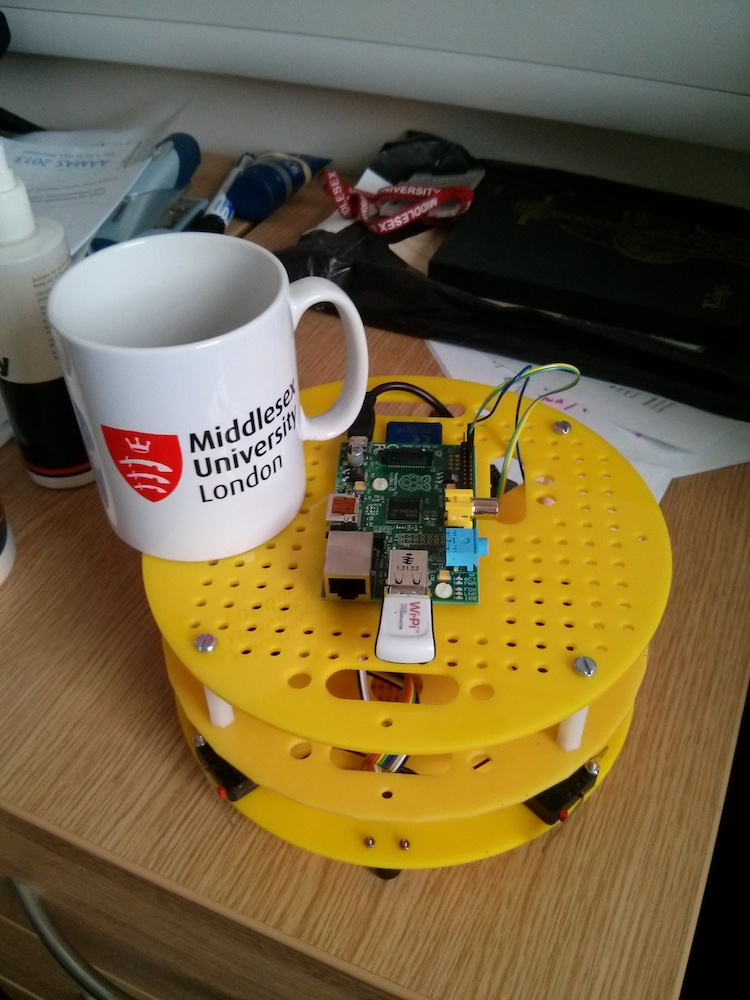

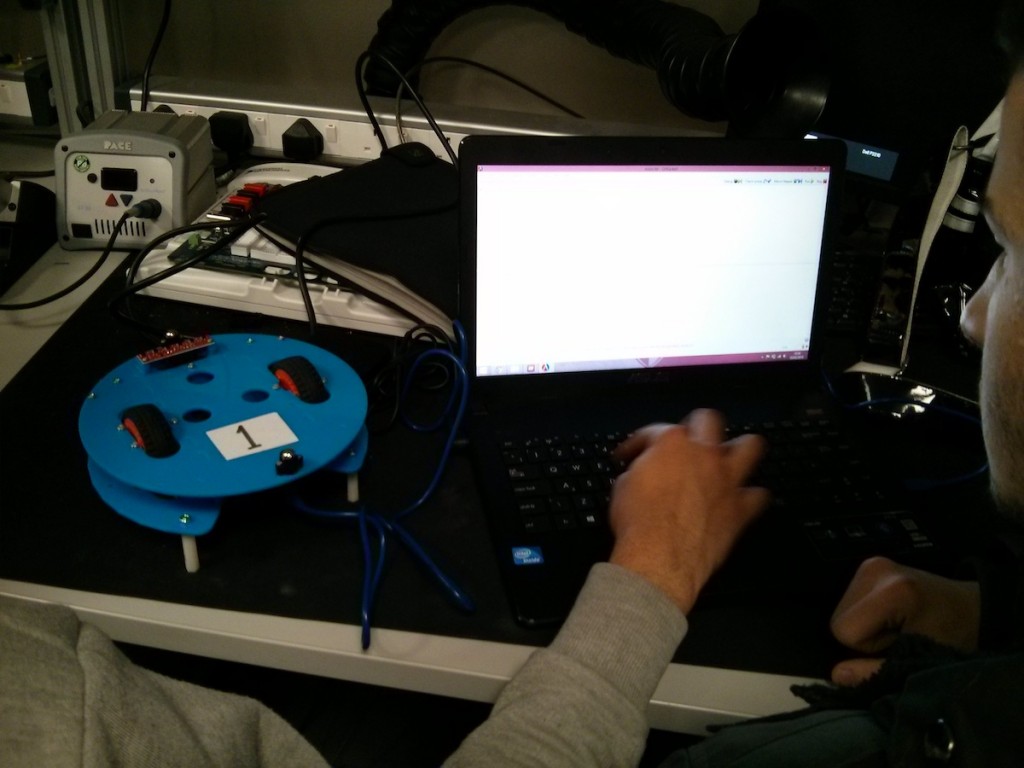

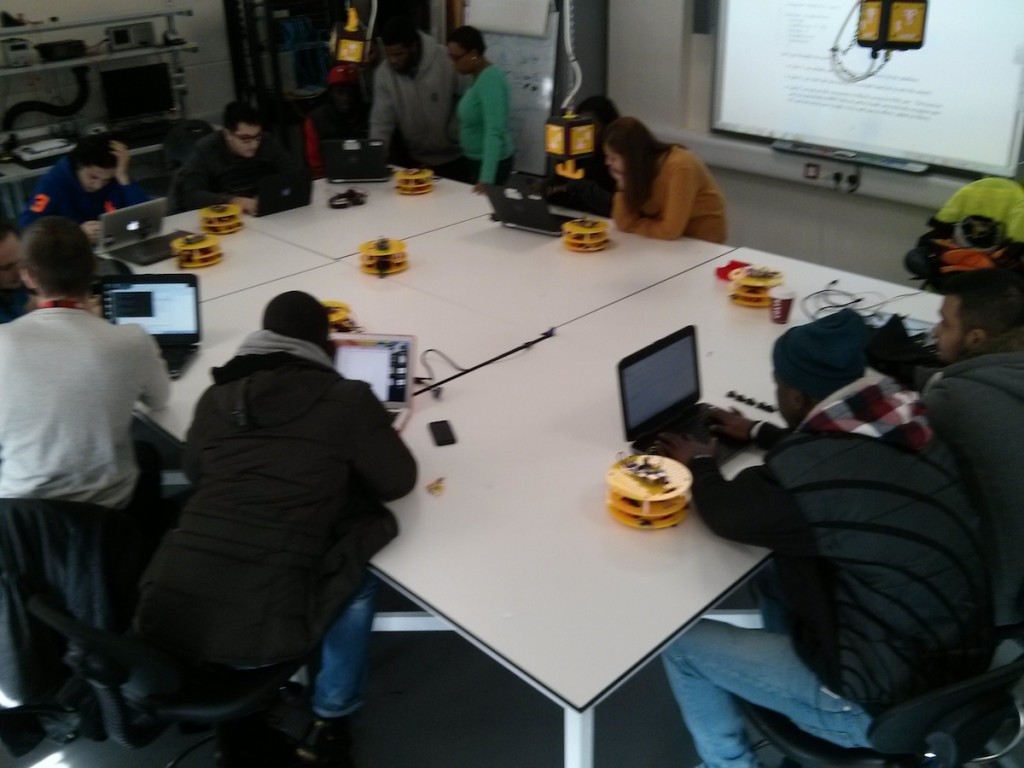

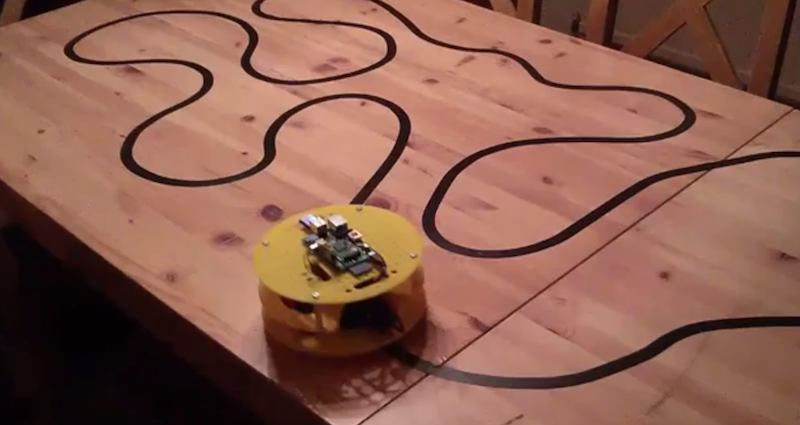

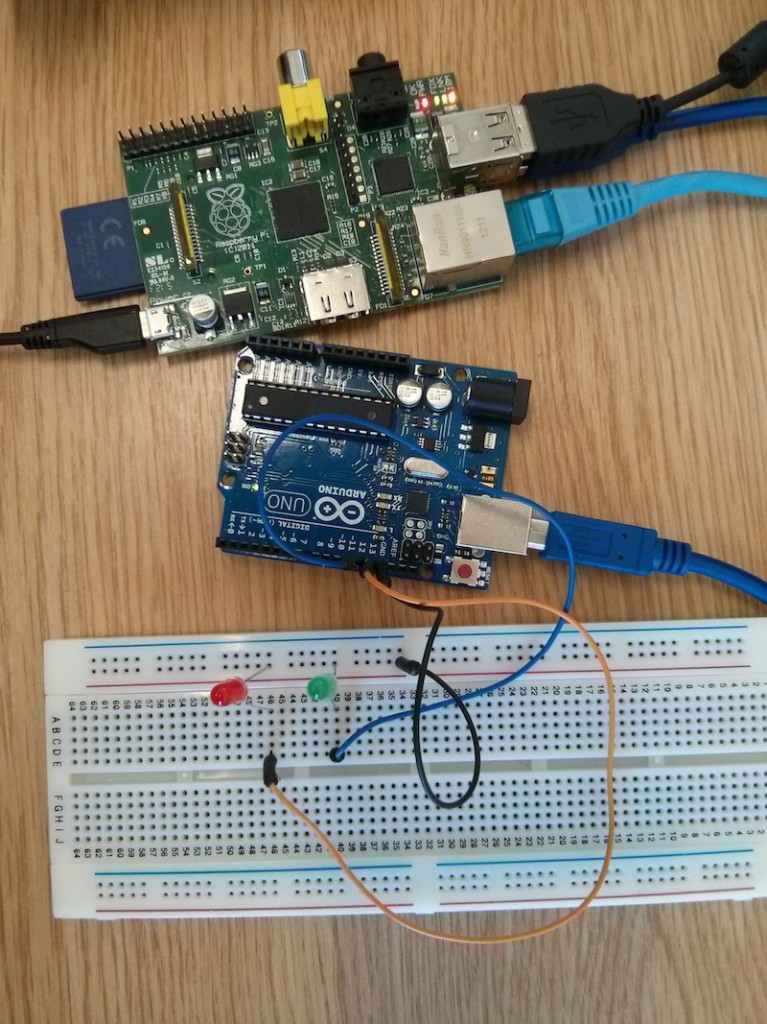

Last year we built the Middlesex Robotic Platform (MIRTO) using a Raspberry Pi and an Arduino Uno board. In our configuration the Arduino board is essentially a “slave” of the Raspberry Pi. A pair of HUB-ee wheels, infra-red sensors and bumper sensors are attached to the Arduino board, but all the “logic” is on the Raspberry Pi. It is the Raspberry Pi that sends the instructions to read the IR values and to set the speed of the wheels. The Arduino board is attached to the Raspberry Pi using a serial connection (GPIO pins on the Raspberry Pi and pins 0 and 1 on the Arduino). In MIRTO we installed Firmata on the Arduino board and we extended the Firmata clients available on the Raspberry Pi. More precisely, we had to extend Firmata with specific messages for the wheels (in both directions: to set the speed and to read the quadrature encoders) and for all the other extensions we wanted to use, such as sonar distance sensors. I think that protocols of this kind can open up a lot of opportunities by allowing the integration of platforms such as a Raspberry Pi and possibly multiple Arduino boards.

However, we soon realised that Firmata is not as easy to extend as we wanted. In particular, it may be difficult to add one of the many available Arduino libraries for things such as NeoPixels. We also found the 7-bit encoding of Firmata messages error-prone (in the sense that our code had a lot of bugs :-). For these reasons, Michael Margolis suggested the implementation of a new, simpler protocol. We wanted the protocol to be text-based and to allow easy integration of new services. We now have a working implementation of this protocol, which we called the Arduino Service Interface Protocol (ASIP). Michael has developed the Arduino code, which is available at https://github.com/michaelmargolis/asip

We have then written client libraries for:

- Java: https://github.com/fraimondi/java-asip

- Racket: https://github.com/fraimondi/racket-asip

- Erlang: https://github.com/ngorogiannis/erlang-asip (thanks to Nikos Gorogiannis)

(and we are working on a Python implementation).

The current implementation uses serial communication, but Michael Margolis has designed the protocol so that it can be very easily adapted to any form of streaming communication.

OK, let’s see some practical details. First of all, a quick overview of the installation process:

- Download the code available at https://github.com/michaelmargolis/asip; you will see that there are two directories: documents/ and asip/

- Copy the directory asip/ (not documents) to the library folder of your Arduino IDE. If you don’t know where this folder is, check Manual Installation at this link http://arduino.cc/en/Guide/Libraries

- Restart your Arduino IDE. At this point, if you click File -> Examples, you should see an asip menu. Select AsipIO to install a simple ASIP Input/Output configuration. This will allow you to control digital and analog pins.

- Connect an Arduino board, upload the code, open the serial monitor and check that you are receiving regular messages of the form

@I,A,{...} (these are the values of the analog pins).

If everything is OK on the Arduino side, you can now play with one of the libraries available. As an example, I’m going to show you how to use the Java library available at https://github.com/fraimondi/java-asip. Clone the repository and open one of the examples, for instance src/uk/ac/mdx/cs/asip/examples/SimpleBlink.java. The code is the following:

```java
package uk.ac.mdx.cs.asip.examples;

import uk.ac.mdx.cs.asip.AsipClient;
import uk.ac.mdx.cs.asip.SimpleSerialBoard;

/*
 * @author Franco Raimondi
 *
 * A simple board with just the I/O services.
 * The main method does a standard blink test.
 * We extend SimpleSerialBoard but this is not
 * strictly required for this example.
 */
public class SimpleBlink extends SimpleSerialBoard {

    public SimpleBlink(String port) {
        super(port);
    }

    public static void main(String[] args) {
        // You need to provide the serial port to which Arduino
        // is connected. Under Win this could be COM3 or COM4,
        // check the Arduino IDE to find out.
        SimpleBlink testBoard = new SimpleBlink("/dev/tty.usbmodem1411");

        // We need a try/catch block to sleep the thread.
        try {
            // This is a simple set-up: we request
            // port mapping for digital ports (not strictly
            // necessary).
            Thread.sleep(1000);
            testBoard.requestPortMapping();
            Thread.sleep(500);
            // We then set pin 13 to OUTPUT mode and pin 2
            // to input (pull-up) mode, even if pin 2 will
            // not be used and therefore we could skip this.
            testBoard.setPinMode(13, AsipClient.OUTPUT);
            Thread.sleep(500);
            testBoard.setPinMode(2, AsipClient.INPUT_PULLUP);
            Thread.sleep(500);
        } catch (InterruptedException e) {
            e.printStackTrace();
        }

        while (true) {
            // As above, we need a try/catch block to sleep.
            // Then, we just loop forever, turning on and off
            // a LED attached to pin 13.
            try {
                testBoard.digitalWrite(13, AsipClient.HIGH);
                Thread.sleep(2000);
                testBoard.digitalWrite(13, AsipClient.LOW);
                Thread.sleep(500);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
        }
    }
}
```

The code should be pretty self-explanatory. It subclasses a class called SimpleSerialBoard (but this is not strictly necessary), then sets up the pin modes and loops forever writing HIGH and LOW to pin 13. You can explore other methods by opening the files LightSwitch.java and Potentiometer.java, showing how to read digital and analog input pins.

Before discussing how to add additional services, let’s have a look at the format of ASIP messages. Messages are ASCII strings, terminated by an end-of-line character. An example message to the Arduino board is I,P,13,1 where I is a character identifying a service (in this case, the Input/Output service), followed by an instruction to that service (in this case P, meaning set the value of a digital pin), followed by the pin number and by the value to be written.
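To make the request format concrete, here is a minimal sketch of how such a line could be assembled in Java. The class AsipRequestSketch and its format method are hypothetical names used only for illustration (they are not part of java-asip), and the sketch assumes that the end-of-line character is '\n'.

```java
import java.util.StringJoiner;

/** Illustrative helper (hypothetical, not part of java-asip): formats ASIP-style request lines. */
final class AsipRequestSketch {

    private AsipRequestSketch() {
    }

    /**
     * Joins a service ID, a request tag and integer parameters with commas.
     * Assumption: the line terminator is '\n'.
     */
    static String format(char serviceId, char tag, int... params) {
        StringJoiner joiner = new StringJoiner(",", "", "\n");
        joiner.add(String.valueOf(serviceId));
        joiner.add(String.valueOf(tag));
        for (int p : params) {
            joiner.add(Integer.toString(p));
        }
        return joiner.toString();
    }

    public static void main(String[] args) {
        // Service 'I' (Input/Output), request 'P' (write a digital pin), pin 13, value 1.
        System.out.print(format('I', 'P', 13, 1));
    }
}
```

Running main should print the example message from the text, I,P,13,1, followed by a newline. A client library would write this string to the serial port instead of printing it.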

Messages from the Arduino board are initiated by a special character: @ starts a standard message, ~ starts an error message, and ! starts debug/reporting messages. After this character, the message has a structure similar to the one above: a service ID, followed by a service-specific character, and then a list of parameters.
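As a rough illustration of this structure, the sketch below classifies an incoming line by its first character and then splits off the service ID and the service-specific character. All names here (AsipIncomingSketch, Kind, parse) are hypothetical and do not come from java-asip; the parameter list is kept as a raw string because its layout depends on the service, and the payload used in main is made up for the example.

```java
/** Illustrative parser for lines sent by the board (hypothetical, not the java-asip API). */
final class AsipIncomingSketch {

    enum Kind { STANDARD, ERROR, DEBUG, UNKNOWN }

    final Kind kind;
    final char serviceId;
    final char tag;
    final String payload;

    private AsipIncomingSketch(Kind kind, char serviceId, char tag, String payload) {
        this.kind = kind;
        this.serviceId = serviceId;
        this.tag = tag;
        this.payload = payload;
    }

    static AsipIncomingSketch parse(String line) {
        String text = line.trim();
        if (text.isEmpty()) {
            return new AsipIncomingSketch(Kind.UNKNOWN, '\0', '\0', "");
        }
        Kind kind;
        switch (text.charAt(0)) {
            case '@': kind = Kind.STANDARD; break;
            case '~': kind = Kind.ERROR; break;
            case '!': kind = Kind.DEBUG; break;
            default:
                // Not an ASIP header character: keep the whole line as payload.
                return new AsipIncomingSketch(Kind.UNKNOWN, '\0', '\0', text);
        }
        // After the header: service ID, service-specific character, then the parameters.
        String[] parts = text.substring(1).split(",", 3);
        char serviceId = parts[0].isEmpty() ? '\0' : parts[0].charAt(0);
        char tag = (parts.length > 1 && !parts[1].isEmpty()) ? parts[1].charAt(0) : '\0';
        String payload = parts.length > 2 ? parts[2] : "";
        return new AsipIncomingSketch(kind, serviceId, tag, payload);
    }

    public static void main(String[] args) {
        // The payload "1,2,3" is invented for this example.
        AsipIncomingSketch m = parse("@I,A,1,2,3");
        System.out.println(m.kind + " " + m.serviceId + " " + m.tag + " " + m.payload);
    }
}
```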

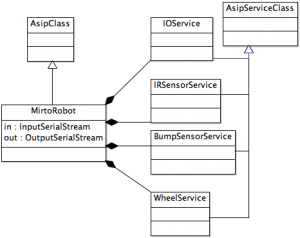

The Java library simply abstracts from these messages and provides easy-to-remember method names. The library creates a reading thread to manage incoming messages. This structure is the same in the Racket and Erlang clients. The key notion in ASIP is that of a service. Examples of services are a distance service, a motor service, a NeoPixel LED strip service, etc. Each service is identified by a single-character ID. For instance, a motor service has ID ‘M’, while a distance sensor has ID ‘D’. If you want to implement a new service, you need to do two things:

- Implement code for the Arduino board.

- Implement code for a client library.

As an example of how to implement a new service, switch to branch neopixels on https://github.com/michaelmargolis/asip/ and open the file asip/utility/asipNeoPixels.h. This is the header file that defines a new service to control a strip of NeoPixel LEDs. As you can see from the header file, we are defining a new class asipNeoPixelsClass that is a subclass of asipServiceClass. The new class needs to implement the abstract methods of the superclass. In particular, it should define how to process messages directed to this service. Open the implementation asipNeoPixelsClass.cpp and check the method processRequestMsg: it shows how to process messages to set the brightness, change the colour of a pixel, and show the LED strip. You decide what to implement here, and how. So far, we have used IDs for the specific operations that we want the service to perform, followed by optional parameters, all in the form of comma-separated values.

After defining this new service class, you should write Arduino code to instantiate the new service. For an example, open the file examples/AsipNeoPixels/AsipNeoPixels.ino. This file creates a firmware with support for the standard I/O service, for a distance service attached to pin 4, and for two NeoPixel strips attached to pins 6 and 9. Essentially, this code creates the appropriate services, adds them to an array of services to be handled in the main loop, sets up the pins appropriately, and then loops by invoking the method service of AsipClass. This is the method that keeps reading incoming messages and dispatches them to the registered services, and that also sends data from the services to the serial port.

Upload the code above to the board and at this point the board should be ready to use: just send messages to the serial port with the appropriate ID and requests, and you will see pixels turning on and off. You can do this from the serial monitor, if you just want to test things. If you want to define a corresponding Java service for java-asip, have a look at the file src/uk/ac/mdx/cs/asip/services/NeoPixelService.java at https://github.com/fraimondi/java-asip/. This class extends a generic AsipService class and provides methods to read/write messages for NeoPixels services. As an example of application, check the file src/uk/ac/mdx/cs/asip/examples/SimpleNeoPixelWithDistance.java: the file shows how to initialise a board with all the required services and how to use the methods provided by NeoPixelService.java.
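The general shape of a client-side service can be sketched as follows: each service declares its single-character ID, and a dispatcher hands every standard message to the service registered under that ID. This is only an illustration under stated assumptions: SketchService, SketchDistanceService and SketchDispatcher are hypothetical names, they are not the AsipService or NeoPixelService classes of java-asip, and the tag 'e' and the payload in the usage note are invented.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical client-side service interface (NOT the java-asip AsipService class). */
interface SketchService {
    char id();

    void handle(char tag, String payload);
}

/** Hypothetical distance service: it simply remembers the last payload it received. */
final class SketchDistanceService implements SketchService {
    private volatile String lastPayload = "";

    public char id() {
        return 'D'; // the text uses 'D' as the ID of a distance sensor
    }

    public void handle(char tag, String payload) {
        lastPayload = payload;
    }

    String lastPayload() {
        return lastPayload;
    }
}

/** Routes standard ('@') messages to the service registered under the message's ID. */
final class SketchDispatcher {
    private final Map<Character, SketchService> services = new HashMap<>();

    void register(SketchService service) {
        services.put(service.id(), service);
    }

    /** Returns true if the line was delivered to a registered service. */
    boolean dispatch(String line) {
        String text = line.trim();
        if (text.length() < 2 || text.charAt(0) != '@') {
            return false; // error and debug lines are ignored in this sketch
        }
        String[] parts = text.substring(1).split(",", 3);
        if (parts[0].length() != 1 || parts.length < 2 || parts[1].isEmpty()) {
            return false; // malformed header
        }
        SketchService service = services.get(parts[0].charAt(0));
        if (service == null) {
            return false; // no service registered for this ID
        }
        service.handle(parts[1].charAt(0), parts.length > 2 ? parts[2] : "");
        return true;
    }
}
```

With a SketchDistanceService registered, dispatching the invented line @D,e,42 would store 42 as the last payload, while a line for an unregistered ID such as M would be rejected. In a real client the reading thread would call dispatch for every line received from the serial port.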

We have published a tool paper about ASIP; you can find it here:

- Mirco Bordoni, Michele Bottone, Bob Fields, Nikos Gorogiannis, Michael Margolis, Giuseppe Primiero, Franco Raimondi, Towards Cyber-Physical Systems as Services: the ASIP Protocol

As usual, drop me an email or leave a comment if you have questions.